SignAttention: On the Interpretability of Transformer Models for Sign Language Translation

Conference paper

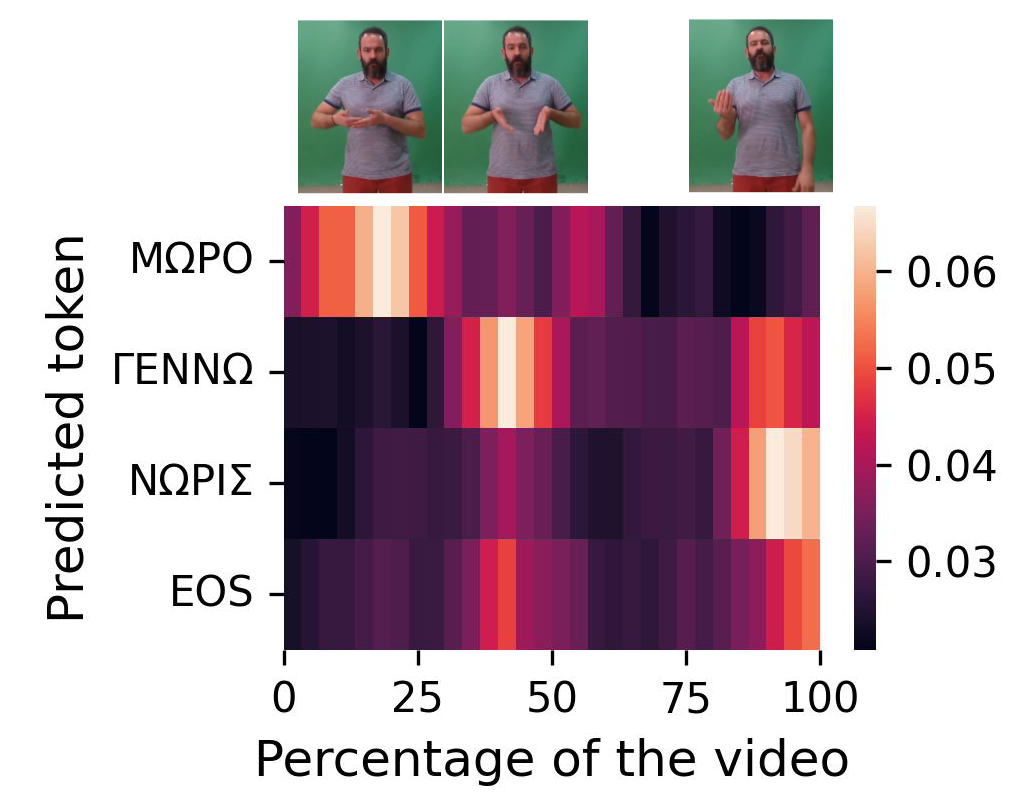

This paper studies the interpretability of a Transformer-based model that translates Greek Sign Language into glosses and text. It finds that the model attends to clusters of frames, that this alignment weakens as the number of glosses increases, and that attention shifts from video frames to predicted tokens as translation proceeds. The work improves understanding of sign language translation (SLT) models and supports more transparent translation systems.